Micro-tasking for Tissue Image Labelling

Product Designer, User Researcher

Jan 2020 - Aug 2020

9 Months

Design brief

During my time at PathAI, I was tasked with envisioning the design strategy for the microtasking platform used to collect labels/annotations that support machine learning (ML) model development.

I was the lead designer for the platform, responsible for end-to-end product design. My responsibilities included requirements gathering, defining the problem space, user research, interaction design, visual design, copy design, and usability testing with board-certified pathologists.

In this case study, I will deep dive into the task dashboard experience that our expert pathologists interacted with to label tissue samples. These labels play a vital role in evaluating and improving ML algorithms that fuel the AI developed by PathAI.

This experience launched in September 2020, about four years after the dashboard was first released, and improved the experience for our annotators. The overarching design problem was:

How might we improve the information hierarchy and layout of the annotator dashboard to increase engagement?

Available Tasks on dashboard - card and list view

What do we mean by annotations?

Every ML model at PathAI needs to be "taught" how to identify biological substances on a pathology slide. Annotations, or labels, are a layer of information on top of the raw data (a microscopic pathology slide) that helps ML models learn what the tissue sample means in terms of diagnosis, as identified by an expert pathologist.

Annotations are important for the business because, if our ML models were a "car", annotations would be its "fuel". Just as a car needs fuel of acceptable quality to avoid breakdowns, the quality of annotations or labels determines the outcomes of our ML algorithms, which makes them critical for the business.

Zoomed-out view of Non-Alcoholic Steatohepatitis (NASH) tissue

Zoomed-in view of an annotated "Frame" (algorithm-identified subsection of the slide)

Maximum zoom showing the annotated (red polygons) nuclei (membrane-bound organelles containing genetic material such as DNA)

Who is the Annotator persona? What are their motivations?

Illustration by Design Intern Chorong Park

Our annotators are board-certified pathologists who contribute their expertise to PathAI in several ways, one of which is annotating (labelling) our pathology slides.

They have various motivations for working with PathAI, including:

- The flexible, remote nature of the work

- Keeping up to date with the latest technologies in their field

- Hourly pay comparable to their day jobs

- Being part of something bigger and transformative in AI-powered pathology

End-to-End Annotation Task Workflow

In this section, I illustrate the complete workflow of the annotation platform and the various personas who interact with it. In addition to the core contributing pathologists, our ecosystem includes:

- Scientific Program Manager – Responsible for defining, tracking, and managing scientific ML model development services for pharma clients

- Internal Pathologist – Internal PathAI pathologists who provide their pathology expertise throughout model development projects

- Machine Learning Engineers (MLEs) – Responsible for developing algorithms that identify specific biomarkers in pathology slides

- Community Experience Manager – The liaison between our internal stakeholders and external annotator pathologists, responsible for the overall health of the relationship between PathAI and our pathologist network

Workflow laying out the various users/stakeholders and their interaction points

The workflow shows three key flows:

1. Internal stakeholders define the task based on requirements, then create and assign it.

2. Annotator pathologists receive the task, work on it, submit it, and receive payment and feedback (if any).

3. MLEs use the results of the task for ML model development, which might result in revised versions of the same task or an entirely new task.

This case study dives deep into the following key use cases in step 2:

- When annotator pathologists log into the platform, they have to decide which task to do (if any).

- After completing a task, they want to know the status of its payment.

- They want to know what types of tasks to expect from PathAI in the near future so they can plan their time accordingly.

With this premise, let's revisit our design problem:

How might we improve the information hierarchy and layout of the annotator dashboard to increase engagement?

Previous Dashboard Design

Let's understand why a redesign of the dashboard was important for the user and the business.

The previous dashboard had some known pain points, including:

1. The dashboard was not easy to scan for the most important information. There was scope to improve the layout and information architecture (IA).

2. Tasks did not all receive the same visual treatment on the dashboard. The distinction between "Active" and "Non-Active" tasks was confusing and counterintuitive to user behavior: we learnt from research that users might "test drive" a few slides before deciding to complete the entire task.

3. Important information like payment details was buried deep in the task instructions. We often received queries about payment details both before and after annotators completed an annotation task.

4. Tasks that users could no longer work on still showed up on the dashboard, taking up precious space.

Design

The design process for this project involved both strategic planning and tactical delivery within agile sprint cycles. I used common design patterns from our design system (Anodyne 2.0) for visual alignment with other PathAI products.

The design process was highly collaborative and iterative, involving the product, engineering, and design teams. I regularly organized and led remote co-creation design jams with our engineering team to get their input early in the design process.

In addition to gathering internal feedback, I benchmarked microtasking products (Amazon Mechanical Turk, UserTesting.com), task management products (GitHub, Jira, Monday.com, Airtable), and data labelling platforms (Centaur Labs).

Some of my key takeaways from secondary research included:

1. The Centaur Labs app uses medical images and tags that make its cards visual and easy to scan.

2. Amazon MTurk shows an overview and earnings on its home page for Turkers (workers).

3. UserTesting.com highlights the dollar amount and the device the user needs (a prerequisite). It also has screener tasks that can "qualify" you if you meet the criteria.

4. Use color sparingly, only to highlight something important.

Secondary research: 1. MTurk, 2. UserTesting.com, Monday.com, Jira, GitHub

Design Brainstorm with the squad

Interaction and Visual Design

One of the most important elements on the task dashboard was the task "card" (or task "row" in table view). Below are various explorations of this card, along with its final design and information layout.

Task card design and explorations.

Dashboard Screens:

Quick view, filtered by task status

- Available Tasks: all tasks that are available for the annotator to do

- Completed Tasks: all tasks completed by the annotator that are either under review or have had payment processed

- Upcoming Tasks: tasks in the pipeline, to help the annotator anticipate upcoming work from PathAI

Available, Complete and Upcoming Tasks

View Task instructions + Contact Support

Tablet Experience

Components, variants and screens

Designing for Scale

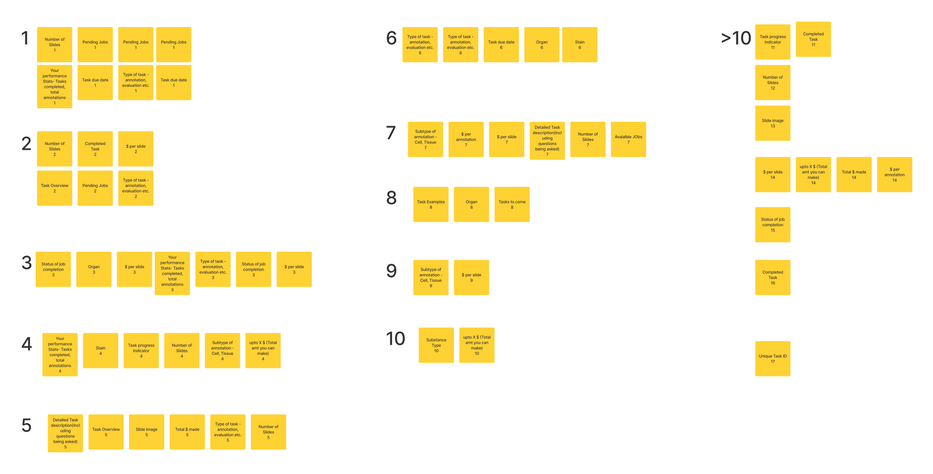

One of the key design principles for this dashboard experience was to anticipate future use cases and scenarios that would help PathAI scale to 10X more annotation tasks.

1. Number of Tasks

The first use case involves how the task dashboard might evolve as the number of tasks grows and we move toward more microtasks with more data.

To address this, I designed a table view of tasks that could support advanced sorting and filtering in the future. It would also let users see more tasks above the fold, along with pagination.

2. Types of Tasks

One type of task we have been exploring is the "qualification task", which is sent to an annotator before the actual job to assess their ability to annotate a particular biomarker.


Visual styles for the card exploring the qualification task as a special task

Concept exploring "locked tasks", which can be unlocked after doing qualification tasks

Additional Concept Explorations

Here I explore two concepts that did not make it into the final V2 design but are worth discussing:

- User stats and impact: How might we motivate users by showing them their performance so far and how it fits into the big picture of model development?

- Gamification and social performance as motivators: Data labelling can be a highly mundane, repetitive, and laborious task for a pathologist. The hypothesis behind this concept was that adding some fun gamification elements might improve user engagement on the product.

Additional concepts: 1. How might we share annotator stats and their impact on the dashboard? 2. How might we use gamification and social motivation to increase engagement?

User Research

When I joined the annotation platform team, the platform had been without a designer and project manager for more than eight months. I teamed up with the squad's project manager to answer some broad questions through a qualitative user study, as a follow-up to a quantitative quarterly survey.

Goals and Methodology

What is the most important and actionable information for pathologist annotators on the annotation dashboard?

Participant Demographics

- The research consisted of remote, moderated 60-minute sessions with 7 annotator pathologists and 1 internal pathologist (pilot study).

- Participants were a mix of active and inactive contributing pathologists on the platform.

The most salient needs identified in these sessions were:

- Most users would like to see how many slides/tasks are available to them so that they can plan their time accordingly.

- They want to see the type of annotation/label task so that they can gauge its difficulty and how long it will take to complete.

- Users want to see a due date so that they know whether they have enough time to start the task or should let it go.

User Research in Action

Measuring Impact

Measuring the impact of a design change is important. However, at the time, PathAI had not enabled analytics that would let us track experience metrics accurately and easily.

Thus, I sent out an experience survey two weeks after the release of the feature to measure the impact and capture any additional feedback from our users.

Here are some screen grabs of the survey that was sent to our Pathologist Network. We received 33 responses that captured quantitative and qualitative feedback on this experience.

Overall, most users gave very positive feedback. Below are some verbatim user quotes:

What users liked the most:

Easy Navigation

"Much simpler navigation; availability of slides completed/left in each task

Easier to assess tasks without having to open each one. More choices fit on the screen. Easier to track progress on tasks."

Clarity of Information

"Clear and concise and know how many slides available total for your to work with.

much easier to navigate. drawing tool much improved"

"I can plan better and manage my time knowing the number of slides in each task"

Engaging and Visual

"It's more colorful, organized, and engaging. I like that the payment per task is clearly displayed without having to open each task. Also, the progress (i.e. 0 out of 20 slides completed, etc.) is very helpful so I know the goal and so I can decide where to take breaks or complete the entire task."

"I like having the overlay colors on the screen and the currently selected cell type on the screen, easy to visualize, Tells you how many slides are left"

"It is more visual, listing all of our previous tasks the layout, Easy to use

Seeing how many slides are available to complete"

Task Progress

"I like knowing what status projects are in and knowing how many are available/how far I am into them."

"The Complete tab is very helpful for tracking project status"

Slide Availability

"Knowing how many total slides are available"

"It says that how many is assigned to me."

"The most I like is knowing how many total slides are available for each task.

Seeing all my outstanding tasks at once, including the ones I have completed. Also, seeing the total number of slides available to review on a project"

"That you can see the total number of slides pending."

"Number of slides in the task. The number of slides available."

"Ability to know the number of available slides"

"I like to see how many tasks are left. Knows how many still available"

"I now know how many slides are available for each task."

Task Availability

"It's easier to see what assignments are available, as well as keep up with how many are done and what's available. Allows me to estimate the time that may be required to do the tasks."

"That is shows how many remaining tasks are available"

"Very easy to see what tasks are available and how many slides remain"

What users would like to see improved:

Sticky older tasks occupying screen space

"That older tasks that were for training keep showing on the dashboard. These take a higher position and you need to scroll down on the screen to get to more current tasks."

Technical limitation that confuses users

"That it is not immediately clear if there are still tasks available (a task will continue to appear on the "available" tab, but there are zero slides available)"

"When job is done, still in there ( but listed as complete). Should only put those available not complete jobs there."

"I wish it was a little easier to tell which tasks are still available vs. the ones with 0 slides left."

Improve sorting and filtering

"inability to sort - why are the training tasks I did presented at the beginning? The default should be in chronological order from most recent to oldest, so that training tasks should be at the end"

Experience survey and results

Reflection

PathAI was the smallest company and design team I have worked for, and I learnt a lot in a short time. Here are some learnings from this project:

- Always take your team on the design journey when working on a project so that they understand why the project is important for users. For this project, I involved the engineering team in the design process through brainstorms. I think this was key to building excitement across the team and stakeholders.

- Share user findings and stories with your engineering counterparts. Design is a very collaborative process in the tech industry.

- Iterate + Seek Feedback: I know I don't always have the answers, so I seek inspiration and feedback from users and from internal peers and stakeholders in design, engineering, and product.

- Doing things the right way in a startup is hard; you will not always have the buy-in or resources. This project taught me resilience and persistence as life lessons.

Design responsibilities:

System design, UI design, UX design, User research (Qualitative and quantitative)

Tools used:

Design: Figma

Research: Zoom, Dovetail, Airtable, G Forms